
Hey there,


Hello garaujo23!

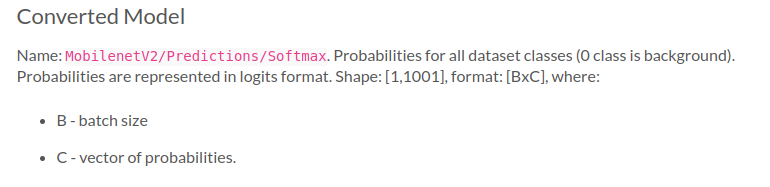

The model that you linked does indeed include a softmax at the end, so you don't need to apply one afterwards; you can use the output directly.

Regarding the problem in your softmax implementation (which isn't needed in this case): be careful, because the output vector is an unsigned NumPy array. Subtracting `np.max(x)` makes every element smaller than the maximum wrap around (integer underflow) to a large positive value, and those values then overflow in the `exp` operation. Convert the output array to a signed integer type (e.g. `x.astype(np.int32)`) before proceeding.
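A minimal sketch of the fix, assuming the output is a 1-D `uint8` array. The values in `scores` are made up for illustration, and `softmax` is a hypothetical helper, not a library function. It first reproduces the wraparound, then casts to `np.int32` before subtracting the maximum, so every exponent is at most 0 and `np.exp` cannot overflow.

```python
import numpy as np

def softmax(x: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis (hypothetical helper)."""
    # Cast to a signed type first: in unsigned arithmetic, x - max(x) wraps around.
    z = x.astype(np.int32)
    # Shift so the largest entry is 0; every exponent is then <= 0, so exp cannot overflow.
    z = z - np.max(z, axis=-1, keepdims=True)
    e = np.exp(z)  # np.exp on an int32 array returns float64
    return e / np.sum(e, axis=-1, keepdims=True)

# Made-up uint8 output, standing in for a quantized model's raw scores.
scores = np.array([10, 200, 30], dtype=np.uint8)

# The pitfall: this subtraction stays in uint8, so 10 - 200 wraps to 66 instead of -190.
wrapped = scores - np.max(scores)

probs = softmax(scores)
print("wrapped:", wrapped)
print("probs:", probs, "sum:", probs.sum())
```

Casting to a floating type such as `np.float32` works just as well; what matters is that the subtraction no longer happens in unsigned arithmetic.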