
Home

Welcome to the tensorflow-kubernetes-art-classification wiki!

Classify Art using TensorFlow model on Kubernetes

Train an image classification model using TensorFlow running on a Kubernetes cluster.

Cognitive

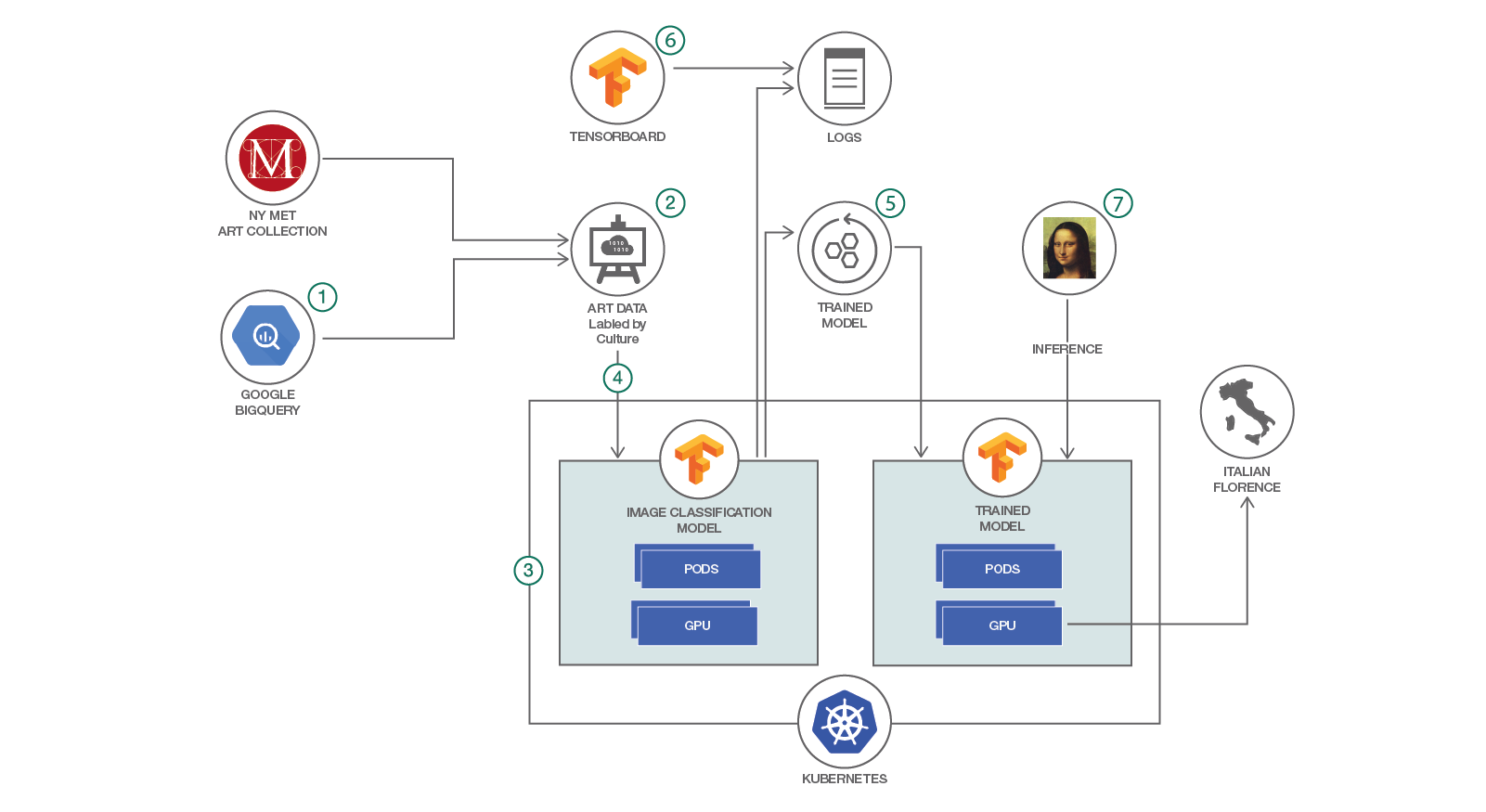

This Code Pattern shows how to build your own data set and train a model for image classification. The models are available in TensorFlow and are run on a Kubernetes cluster. The demonstration code pulls data and labels from the New York Metropolitan Museum of Art website and Google BigQuery. IBM Cloud Container Service provides the Kubernetes cluster. Developers can modify the code to build different image data sets and select from a collection of public models such as Inception, VGG, ResNet, AlexNet, and MobileNet.

By Ton Ngo, Winnie Tsang

https://github.com/IBM/tensorflow-kubernetes-art-classification


In this Code Pattern, we will use Deep Learning to train an image classification model. The images come from the art collection at the New York Metropolitan Museum of Art and the metadata from Google BigQuery. We will use the Inception model implemented in TensorFlow and run the training on a Kubernetes cluster. We will save the trained model and load it later to perform inference. To use the model, we provide a picture of a painting as input, and the model returns the likely culture, for instance Italian Florence art. The user can choose other attributes to classify the art collection by, for instance author or time period. Depending on the compute resources available, the user can choose the number of images to train on, the number of classes to use, and so on. In this Code Pattern, we will select a small set of images and a small number of classes so that the training completes within a reasonable amount of time. With a large data set, the training may take days or weeks.
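The idea of limiting the number of classes and the number of images can be sketched as follows. This is a minimal illustration and not the code from the repository: the function name `build_labeled_subset`, the record fields `image` and `culture`, and the sample rows are all hypothetical. It keeps the most frequent values of the chosen attribute as classes and caps the number of images taken per class.

```python
from collections import Counter, defaultdict


def build_labeled_subset(records, attribute, num_classes, images_per_class):
    """Pick the most common values of `attribute` and cap images per class.

    Hypothetical helper for illustration; the field names are assumptions.
    """
    # Keep only records that have a non-empty value for the chosen attribute.
    labeled = [r for r in records if r.get(attribute)]

    # The most frequent attribute values become the classes.
    counts = Counter(r[attribute] for r in labeled)
    classes = [value for value, _ in counts.most_common(num_classes)]
    label_ids = {value: i for i, value in enumerate(classes)}

    # Walk the records in order, taking at most `images_per_class` per class.
    taken = defaultdict(int)
    subset = []
    for r in labeled:
        value = r[attribute]
        if value in label_ids and taken[value] < images_per_class:
            taken[value] += 1
            subset.append((r["image"], label_ids[value]))
    return classes, subset


# Made-up metadata rows standing in for the real collection metadata.
records = [
    {"image": "1.jpg", "culture": "Italian, Florence"},
    {"image": "2.jpg", "culture": "Japan"},
    {"image": "3.jpg", "culture": "Italian, Florence"},
    {"image": "4.jpg", "culture": "French"},
    {"image": "5.jpg", "culture": "Italian, Florence"},
    {"image": "6.jpg", "culture": "Japan"},
    {"image": "7.jpg", "culture": ""},
]

classes, subset = build_labeled_subset(
    records, "culture", num_classes=2, images_per_class=2
)
print(classes)
print(subset)
```

Passing a different attribute name (for example a hypothetical `period` field) would produce a data set labeled by that attribute instead, which is the kind of change the paragraph above describes.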

When the reader has completed this Code Pattern, they will understand how to:

- Collect and process the data for Deep Learning in TensorFlow

- Configure and deploy TensorFlow to run on a Kubernetes cluster

- Train an advanced image classification Neural Network

- Use TensorBoard to visualize and understand the training process

- Inspect the available attributes in the Google BigQuery database for the Met art collection

- Create the labeled dataset using the attribute selected

- Select a model for image classification from the set of available public models and deploy to IBM Cloud

- Run the training on Kubernetes, optionally using GPU if available

- Save the trained model and logs

- Visualize the training with TensorBoard

- Load the trained model in Kubernetes and run an inference on a new art drawing to see the classification

- TensorFlow: An open-source library for implementing Deep Learning models

- Image classification models: an implementation of the Inception neural network for image classification

- Google metadata for Met Art collection: a database containing metadata for the art collection at the New York Metropolitan Museum of Art

- Met Art collection: a collection of over 200,000 public art artifacts, including paintings, books, etc.

- Kubernetes cluster: an open-source system for orchestrating containers on a cluster of servers

- IBM Cloud Container Service: a public service from IBM that hosts users' applications on Docker and Kubernetes

- TensorFlow: Deep Learning library

- TensorFlow models: public models for Deep Learning

- Kubernetes: Container orchestration

Visualizing High Dimension data for Deep Learning

- IBM Cloud Container Service: A public service from IBM that hosts users' applications on Docker and Kubernetes

- TensorFlow: An open-source library for implementing Deep Learning models

- Kubernetes cluster: An open-source system for orchestrating containers on a cluster of servers

- New York Metropolitan Museum of Art: The museum hosts a collection of over 450,000 public art artifacts, including paintings, books, etc.

- Google metadata for Met Art collection: A database containing metadata for over 200,000 items from the art collection at the New York Metropolitan Museum of Art

- Google BigQuery: A web service that provides interactive analysis of massive datasets

- Image classification models: An implementation of the Inception neural network for image classification
